Advances in graphics processing technology have transformed the computing landscape. The latest generation of graphics processing units (GPUs) opens up new opportunities in gaming, content creation, machine learning, and beyond.

A GPU (graphics processing unit) is a specialized electronic circuit originally designed to accelerate computer graphics and image processing. GPUs are found on video cards and are also integrated into motherboards, mobile phones, personal computers, workstations, and game consoles.

However, GPUs have proven useful well beyond their original purpose, particularly for non-graphical computations that can be broken into many parallel operations. Their massively parallel structure makes them effective at embarrassingly parallel problems, where a task splits into many independent pieces that need little or no communication with one another.

As a result, GPUs are now used in diverse fields, including training neural networks and mining cryptocurrency.

What is a GPU (Graphics Processing Unit)?

At its core, a GPU is a specialized electronic circuit designed to rapidly manipulate and alter memory to accelerate the creation of images in a frame buffer intended for output to a display device.

Originally developed to render high-quality graphics for video games, GPUs have evolved into indispensable components for a wide range of computing tasks beyond graphics rendering.

What Does a GPU Do?

In the early days of computing, the central processing unit (CPU) performed the calculations needed for graphics applications, such as rendering 2D and 3D images, animations, and video.

As graphics-intensive applications grew more complex, this work strained the CPU and noticeably degraded overall computer performance.

GPUs were developed to relieve the CPU of this graphics workload. They execute many graphics calculations quickly and concurrently, enabling fast, smooth rendering of content on screen.

Offloading these computations to the GPU frees the CPU to handle other, non-graphics tasks, improving overall system performance.

How Does a GPU Work?

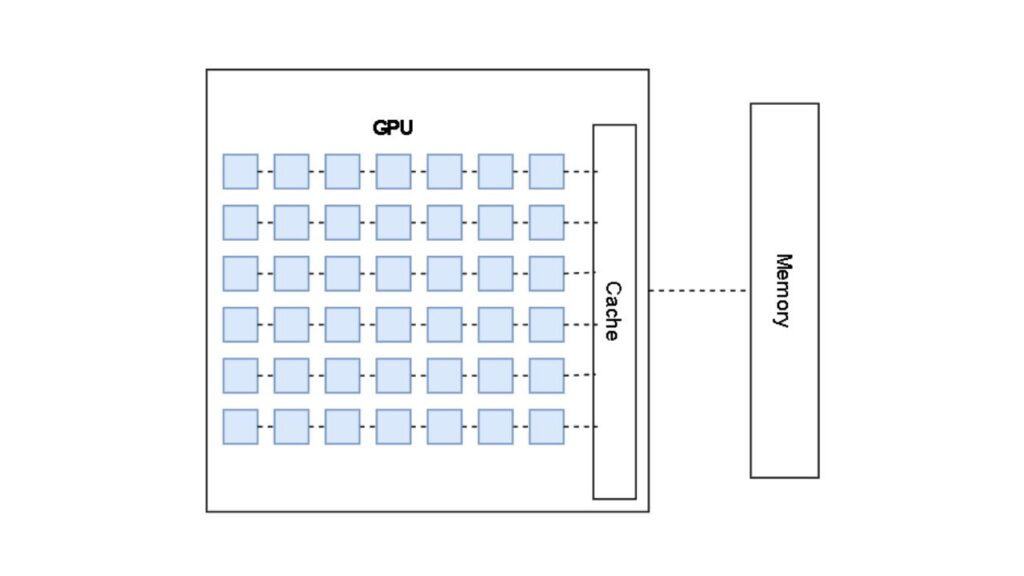

At the heart of a modern GPU are hundreds to thousands of small, simple processing cores. Rather than racing through a single instruction stream, groups of these cores execute the same instruction on different pieces of data at the same time, so together they can perform the many independent computations required to render an image or run other data-parallel tasks.

Many GPUs also have their own dedicated memory, and modern GPUs often include specialized hardware for particular tasks, such as ray tracing or the matrix operations used in machine learning, which further boosts performance in those applications.
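The division of labor described above can be sketched on an ordinary CPU. The Python example below is a minimal, hypothetical illustration rather than real GPU code: `brighten_pixel` plays the role of a per-pixel routine, and a thread pool stands in for the GPU's many cores by processing chunks of an image independently. Because no output pixel depends on any other, the parallel result matches the sequential one. Python threads will not deliver GPU-like speedups; the point is the shape of the work, not its speed.

```python
from concurrent.futures import ThreadPoolExecutor


def brighten_pixel(value: int, amount: int = 40) -> int:
    """Hypothetical per-pixel routine: brighten one 8-bit grayscale pixel, clamped to 255."""
    return min(value + amount, 255)


def brighten_sequential(pixels: list[int]) -> list[int]:
    """Process every pixel one after another, as a single core would."""
    return [brighten_pixel(p) for p in pixels]


def brighten_parallel(pixels: list[int], workers: int = 4) -> list[int]:
    """Split the pixels into chunks and hand each chunk to a separate worker.

    Each output pixel depends only on its own input pixel, so the chunks
    can be processed in any order without coordination.
    """
    chunk_size = max(1, (len(pixels) + workers - 1) // workers)  # ceiling division
    chunks = [pixels[i:i + chunk_size] for i in range(0, len(pixels), chunk_size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in chunk order, so reassembly is a simple concatenation.
        results = pool.map(lambda chunk: [brighten_pixel(p) for p in chunk], chunks)
        return [p for chunk in results for p in chunk]


if __name__ == "__main__":
    image = [0, 50, 100, 200, 230, 255, 10, 90, 180]
    print(brighten_parallel(image))  # [40, 90, 140, 240, 255, 255, 50, 130, 220]
    assert brighten_parallel(image) == brighten_sequential(image)
```

On a real GPU, the same idea is usually expressed with thousands of lightweight threads, often one per pixel or data element, rather than a handful of chunks.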

GPU Use Cases: What GPUs Are Used for Today

The versatility of GPUs has led to their widespread adoption across various industries and applications. From powering immersive gaming experiences to accelerating scientific research, GPUs play a crucial role in:

- Gaming: Rendering lifelike graphics and enabling real-time gameplay experiences.

- Artificial Intelligence: Training deep learning models and accelerating inference.

- Data Science: Processing and analyzing vast datasets to extract insights.

- Computer-Aided Design (CAD): Rendering complex 3D models with precision and speed.

- High-Performance Computing (HPC): Simulating physical phenomena and solving complex equations.

Types of GPUs

GPUs on the market today range from integrated graphics processors in consumer devices to high-performance discrete GPUs in gaming PCs and workstations.

Broadly, there are two types of GPUs:

Integrated GPUs: An integrated GPU is built into the CPU or the computer's motherboard rather than sitting on a separate card. Because no separate board is needed, systems with integrated graphics are typically smaller and lighter.

Integration also reduces overall power consumption, improving energy efficiency.

It often lowers manufacturing costs as well. However, laptops with integrated GPUs generally cannot have their graphics upgraded, so if more graphics performance is needed later, you may have to buy a new device.

For gaming, many modern laptops now meet the demanding requirements of contemporary games, including specific GPU types and processing speeds, and can render high-quality graphics across a wide range of game genres.

Discrete GPUs: A discrete, or dedicated, GPU is housed on its own circuit board, often a removable graphics card. These cards are built for demanding, high-performance applications such as 3D gaming.

Adding a discrete GPU gives a computer a significant performance boost and, in desktops where the card can be swapped, the flexibility to upgrade as the user's requirements evolve.

However, a discrete GPU consumes more energy than an integrated GPU. Under heavy computational load it also generates considerable heat, so it needs dedicated cooling to maintain performance and prevent overheating, particularly in laptops.

What is a Cloud GPU?

In recent years, the rise of cloud computing has led to cloud-based GPU services. Cloud GPUs give users on-demand access to high-performance graphics hardware without having to buy and maintain it themselves.

This lets businesses and individuals use GPU power for tasks such as machine learning, data analysis, and graphics-intensive applications without a large upfront investment in expensive hardware.

GPU vs. CPU

While GPUs and CPUs are both vital components of modern computing systems, they excel at different kinds of work. CPUs have a relatively small number of powerful cores optimized for fast sequential processing, so they execute a few complex tasks quickly and are ideal for general-purpose computing and single-threaded applications.

GPUs, by contrast, are optimized for parallel throughput: they apply the same operation to many data elements at once, which makes them well suited to highly parallelizable workloads such as graphics rendering, machine learning, and scientific simulations.
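The difference in workload shape can be illustrated with a minimal Python sketch (all function names are hypothetical). Squaring every element is data-parallel: any chunk can be computed on its own and the pieces simply concatenated. A running (prefix) sum has a loop-carried dependency, because each output needs the one before it, so naively summing chunks independently gives the wrong answer. Such work either runs sequentially, which suits a CPU, or must be restructured; the last function shows one common restructuring, a chunked scan with an offset fix-up pass of the kind parallel scan algorithms use.

```python
from itertools import accumulate


def square_all(values: list[int]) -> list[int]:
    """Data-parallel: each output depends only on its own input."""
    return [v * v for v in values]


def running_sum(values: list[int]) -> list[int]:
    """Sequential: each output depends on the previous output."""
    return list(accumulate(values))


def split(values: list[int], parts: int) -> list[list[int]]:
    """Cut a list into at most `parts` contiguous, non-empty chunks."""
    size = max(1, (len(values) + parts - 1) // parts)  # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]


def chunked_square_all(values: list[int], parts: int) -> list[int]:
    # Each chunk could go to a different core; results are simply concatenated.
    return [x for chunk in split(values, parts) for x in square_all(chunk)]


def naive_chunked_running_sum(values: list[int], parts: int) -> list[int]:
    # Wrong on purpose: each chunk restarts its sum at zero.
    return [x for chunk in split(values, parts) for x in running_sum(chunk)]


def fixed_chunked_running_sum(values: list[int], parts: int) -> list[int]:
    # Pass 1 (parallelizable): a local running sum within each chunk.
    local = [running_sum(chunk) for chunk in split(values, parts)]
    # Pass 2 (sequential, but only one total per chunk): each chunk's starting offset.
    offsets = [0] + list(accumulate(chunk[-1] for chunk in local))[:-1]
    # Pass 3 (parallelizable): add the offset to every element of its chunk.
    return [x + off for chunk, off in zip(local, offsets) for x in chunk]


if __name__ == "__main__":
    data = [1, 2, 3, 4, 5, 6, 7, 8]
    print(running_sum(data))                   # [1, 3, 6, 10, 15, 21, 28, 36]
    print(naive_chunked_running_sum(data, 4))  # [1, 3, 3, 7, 5, 11, 7, 15]
    print(fixed_chunked_running_sum(data, 4))  # [1, 3, 6, 10, 15, 21, 28, 36]
```

The elementwise case needs no coordination at all, while the running sum needed an extra pass and careful bookkeeping to parallelize, which is why dependency-heavy code often stays on the CPU.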

In short, GPUs are a cornerstone of modern computing, enabling a wide range of applications across many industries. From immersive gaming to scientific research, their versatility and performance continue to push the boundaries of what is possible in computing.

As technology continues to evolve, the role of GPUs will only become more integral, driving innovation and unlocking new possibilities for the future.

Why are GPUs important?

Initially, GPUs were designed for a single task, as their name implies: processing graphics for display. Over time, they have evolved into powerful tools for general-purpose parallel processing.

Origin of the GPU

Before the GPU, computers relied on dot matrix screens, introduced in the 1940s and 1950s, followed by vector and raster displays. The first video game consoles and personal computers came next, marking further milestones.

During this era, graphics were coordinated by non-programmable devices known as graphics controllers, which often depended on the CPU for processing, though some featured on-chip processors.

Around the same time, a 3D imaging project set out to generate individual pixels on a screen with a single processor, with the goal of quickly composing complex images from many pixels. This project was a precursor to the modern GPU.

In the late 1990s, the first GPUs entered the market, aimed mainly at the gaming and computer-aided design (CAD) markets. They combined rendering, transformation, and lighting engines, which had previously run in software, onto a single chip, transforming graphics processing.

Evolution of GPU technology

Nvidia introduced the single-chip GeForce 256, which it marketed as the world's first GPU, in 1999. During the 2000s and 2010s, GPUs grew rapidly in capability, adding features such as hardware tessellation, ray tracing, and mesh shading that greatly advanced image generation and graphics performance.

In 2007, Nvidia released CUDA, a parallel computing platform and programming model that lets developers run general-purpose code on GPUs. It showed how effective GPUs could be for compute-intensive tasks well beyond graphics.

By extending familiar languages such as C and C++, CUDA made GPU programming accessible to a much broader range of developers. They applied it to a wide variety of compute-intensive applications, bringing GPU computing into the mainstream.
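In CUDA's programming model, a kernel is launched over a grid of thread blocks, and each thread typically computes its global index as `blockIdx.x * blockDim.x + threadIdx.x` and processes the element at that index. The Python sketch below only simulates that mapping on the CPU, running the "threads" one after another; it is not CUDA code, and the `launch` and `vector_add_kernel` names are hypothetical. The `if i < len(out)` guard mirrors the bounds check CUDA kernels commonly use, because the grid is rounded up to whole blocks and the last block may extend past the data.

```python
from typing import Callable


def vector_add_kernel(block_idx: int, block_dim: int, thread_idx: int,
                      a: list[float], b: list[float], out: list[float]) -> None:
    """What one simulated GPU thread does: add a single pair of elements."""
    i = block_idx * block_dim + thread_idx  # global thread index, as in CUDA
    if i < len(out):  # threads past the end of the data do nothing
        out[i] = a[i] + b[i]


def launch(kernel: Callable[..., None], grid_dim: int, block_dim: int, *args) -> None:
    """Hypothetical launcher: run every (block, thread) pair sequentially.

    On a real GPU these would execute concurrently across many cores in no
    guaranteed order, so a kernel must not depend on execution order.
    """
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, block_dim, thread_idx, *args)


if __name__ == "__main__":
    n = 10
    a = [float(i) for i in range(n)]
    b = [10.0 * i for i in range(n)]
    out = [0.0] * n
    block_dim = 4
    grid_dim = (n + block_dim - 1) // block_dim  # round up: 3 blocks of 4 threads cover 10 elements
    launch(vector_add_kernel, grid_dim, block_dim, a, b, out)
    print(out)  # [0.0, 11.0, 22.0, 33.0, 44.0, 55.0, 66.0, 77.0, 88.0, 99.0]
```

Writing the per-element logic once and letting the launch configuration decide how many threads run it is what let developers scale the same code from small inputs to millions of elements.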

Today, GPUs are in high demand for applications such as blockchain technology and other emerging fields. They are especially important in artificial intelligence and machine learning (AI/ML), where their processing power drives advances in these rapidly evolving domains.

GPU and CPU: Working Together

The GPU developed as a complement to its close relative, the central processing unit (CPU). While CPUs have improved performance through architectural advances, higher clock speeds, and more cores, GPUs are purpose-built to accelerate computer graphics tasks.

Understanding the distinct roles of the CPU and GPU helps when choosing a system, so you can make the most of both components.

GPU vs. Graphics Card: What’s the Difference?

Although the two terms are often used interchangeably, they refer to different things: the GPU is the chip that processes graphics data, whereas a graphics card is the physical add-in board that houses the GPU along with essential components such as video memory, a cooling system, and power connectors.

Integrated Graphics Processing Unit: Most GPUs on the market today are integrated graphics. But what exactly are integrated graphics, and how do they work in your computer? Integrated graphics means a GPU built into the same chip or package as the CPU rather than supplied on a separate card. This integration enables thinner and lighter systems, lowers power consumption, and reduces overall system cost.

Intel® Graphics Technology, including Intel® Iris® Xe graphics, is a prominent example of integrated graphics. Intel positions it as delivering immersive graphics in systems that run cooler and offer longer battery life.

Discrete Graphics Processing Unit: Integrated GPUs are sufficient for many computing applications, but for resource-intensive tasks with high performance requirements, a discrete GPU (also known as a dedicated graphics card) is a better fit.

Discrete GPUs add processing power at the cost of higher energy consumption and more heat, so they typically need dedicated cooling to perform at their best.

Usage-Specific GPUs

Most GPUs are designed for a specific use, such as real-time 3D graphics or other large-scale computation:

1. Gaming

- Nvidia GeForce GTX, RTX

- AMD Radeon VII

- Nvidia Titan

- Intel Arc

- AMD Radeon HD, R5, R7, R9, RX, Vega and Navi series

2. Cloud Gaming

- Nvidia GRID

- AMD Radeon Sky

3. Workstation

- Nvidia Quadro

- Nvidia RTX

- AMD FirePro

- AMD Radeon Pro

- Intel Arc Pro

4. Cloud Workstation

- Nvidia Tesla

- AMD FireStream

5. Artificial Intelligence Training and Cloud

- Nvidia Tesla

- AMD Radeon Instinct

6. Automated/Driverless Cars

- Nvidia Drive PX